By Michael Roberts

About this time last year, I tackled the subject of artificial intelligence (AI) and the impact of the new large language models (LLMs) such as ChatGPT.

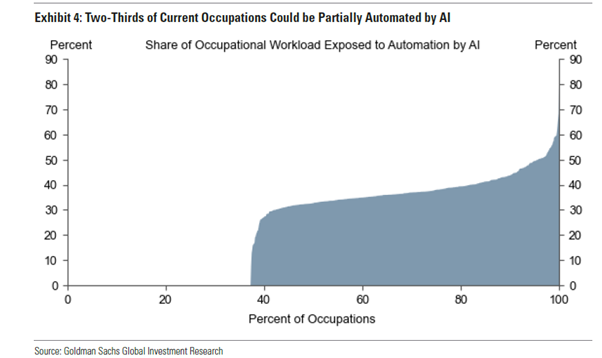

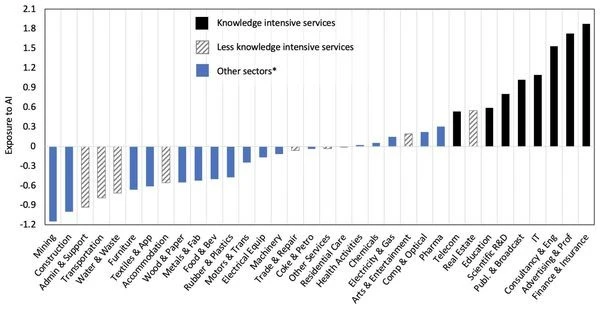

In that post I mainly dealt with the impact on jobs for workers being replaced by AI LLMs and the corresponding effect on boosting the productivity of labour. The standard forecast on AI came from the economists at Goldman Sachs, the major investment bank. They reckoned if the technology lived up to its promise, it would bring “significant disruption” to the labour market, exposing the equivalent of 300m full-time workers across the major economies to automation of their jobs. Lawyers and administrative staff would be among those at greatest risk of becoming redundant (and probably economists!). They calculated that roughly two-thirds of jobs in the US and Europe are exposed to some degree of AI automation, based on data on the tasks typically performed in thousands of occupations.

Prospects for AI excited the economists at Goldman Sachs

Most people would see less than half of their workload automated and would probably continue in their jobs, with some of their time freed up for more productive activities. In the US, this would apply to 63% of the workforce, they calculated. A further 30% working in physical or outdoor jobs would be unaffected, although their work might be susceptible to other forms of automation.

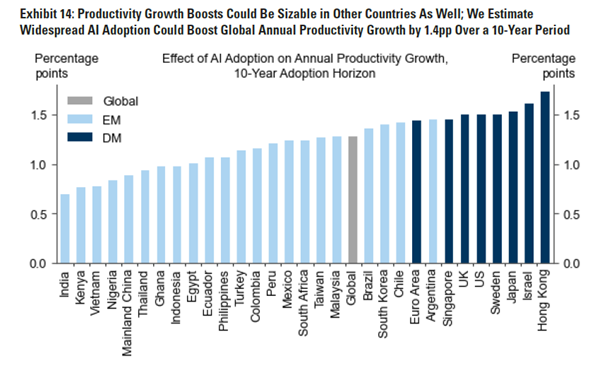

But Goldman Sachs economists were euphoric about the productivity gains that AI could achieve, possibly taking capitalist economies out of the relative stagnation of the last 15-20 years – the Long Depression. GS claimed that ‘generative’ AI systems such as ChatGPT could spark a productivity boom that would eventually raise annual global GDP by 7% over a decade. If corporate investment in AI continued to grow at a similar pace to software investment in the 1990s, US AI investment alone could approach 1% of US GDP by 2030.

But US technology economist Daron Acemoglu was sceptical then. He argued that not all automation technologies actually raise the productivity of labour. That’s because companies mainly introduce automation in areas that may boost profitability, like marketing, accounting or fossil fuel technology, but that do not raise productivity for the economy as a whole or meet social needs.

Acemoglu takes a critical look at the claims for AI

Now in a new paper, Acemoglu pours a good dose of cold water on the optimism engendered by the likes of GS. In contrast to GS, Acemoglu reckons that the productivity effects from AI advances within the next 10 years “will be modest”. The highest gain he forecasts is a total rise of just 0.66% in total factor productivity (TFP), the mainstream measure of the impact of innovation, or a tiny increase of about 0.064% in annual TFP growth. The gain could be even lower, just 0.53%, because AI cannot handle some of the harder tasks that humans do. Even if the introduction of AI raised overall investment, the boost to GDP in the US would be only 0.93-1.56% in total, depending on the size of the investment boom.
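The gap between the two forecasts is easier to see when both are put on an annual basis. The sketch below is a back-of-envelope conversion, not a calculation taken from either paper. It assumes that each figure quoted above is a cumulative gain over ten years and that growth compounds evenly; the function name `annualised_rate` is purely illustrative.

```python
def annualised_rate(cumulative_pct: float, years: int = 10) -> float:
    """Constant annual growth rate (in %) that compounds to cumulative_pct over `years`."""
    return ((1 + cumulative_pct / 100) ** (1 / years) - 1) * 100


# Cumulative ten-year figures quoted in the text (assumed here to be level gains).
forecasts = {
    "Goldman Sachs, global GDP": 7.0,
    "Acemoglu, US GDP (upper)": 1.56,
    "Acemoglu, US GDP (lower)": 0.93,
    "Acemoglu, TFP (upper)": 0.66,
    "Acemoglu, TFP (lower)": 0.53,
}

annual = {label: annualised_rate(total) for label, total in forecasts.items()}

for label, rate in annual.items():
    print(f"{label}: {forecasts[label]}% over 10 years = {rate:.3f}% a year")
```

On these assumptions the GS forecast works out at roughly 0.68% a year, against 0.09-0.16% a year for Acemoglu's GDP range. The comparison is not like-for-like, since the GS figure is for global GDP and Acemoglu's is for the US. The 0.66% TFP figure annualises to about 0.066%, close to but not identical to the 0.064% quoted, which presumably reflects the paper's own method.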

Moreover, Acemoglu reckons that AI will widen the gap between capital and labour income; as he says: “low-education women may experience small wage declines, overall between-group inequality may increase slightly, and the gap between capital and labour income is likely to widen further”. Indeed, AI may actually harm human welfare by expanding misleading social media, digital ads and the IT defense-attack spending. So AI investment may add to GDP but lower human welfare by as much as 0.72% of GDP.

And there are other dangers to labour. Owen David argues that AI is already being used to monitor workers on the job, recruit and screen job candidates, set pay levels, direct what tasks workers do, evaluate their outputs, schedule shifts, etc. “As AI takes on the functions of management and augments managerial abilities, it may shift power to employers.” Shades of the observations of Harry Braverman in his famous book of 1974 on the degradation of work and destruction of skills by automation.

Picking the right priorities for the use of AI

Acemoglu recognises that there are gains to be had from generative AI, “but these gains will remain elusive unless there is a fundamental reorientation of the industry, including perhaps a major change in the architecture of the most common generative AI models.” In particular, Acemoglu says that “it remains an open question whether we need models that engage in human-like conversations and write Shakespearean sonnets if what we really want is reliable information useful for educators, healthcare professionals, electricians, plumbers and other craft workers.”

Indeed, because it is managers and not workers as a whole who are introducing AI to replace human labour, they are already removing skilled workers from jobs they do well without necessarily improving efficiency and well-being for all. As one commentator put it: “I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes.” Managers are introducing AI to “make management problems easier at the cost of the stuff that many people don’t think AI should be used for, like creative work… If AI is going to work, it needs to come from the bottom-up, or AI is going to be useless for the vast majority of people in the workplace”.

Is AI going to save the major economies by delivering a big leap forward in productivity? It all depends on where and how AI is applied. A PwC study found productivity growth was almost five times as rapid in parts of the economy where AI penetration was highest as in less exposed sectors. Barret Kupelian, the chief economist at PwC UK, said: “Our findings show that AI has the power to create new industries, transform the jobs market and potentially push up productivity growth rates. In terms of the economic impact, we are only seeing the tip of the iceberg – currently, our findings suggest that the adoption of AI is concentrated in a few sectors of the economy, but once the technology improves and diffuses across other sectors of the economy, the future potential could be transformative.”

Up to 20 years adoption path

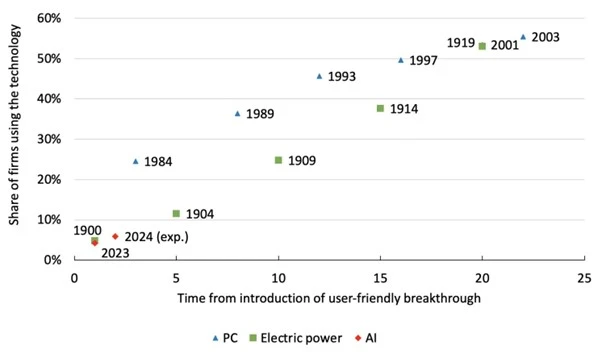

OECD economists are not so sure that is right. In a paper they pose the problem: “how long will the application of AI into sectors of the economy take? The adoption of AI is still very low, with less than 5% of firms reporting the use of this technology in the US (Census Bureau 2024). When put in perspective with the adoption path of previous general-purpose technologies (e.g. computers and electricity) that have taken up to 20 years to be fully diffused, AI has a long way to go before reaching the high adoption rates that are necessary to detect macroeconomic gains.”

“Findings at the micro or industry level mainly capture the impacts on early adopters and very specific tasks, and likely indicate short-term effects. The long-run impact of AI on macro-level productivity growth will depend on the extent of its use and successful integration into business processes.” The OECD economists point out that it took 20 years for previous ground-breaking technologies like electric power or PCs to ‘diffuse’ sufficiently to make a difference. For AI, that would mean waiting until the 2040s.

Moreover, by replacing labour in more productive, knowledge-intensive sectors, AI could cause “an eventual fall in the employment shares of these sectors (that) would act as a drag on aggregate productivity growth”.

And echoing some of the arguments of Acemoglu, the OECD economists suggest that “AI poses significant threats to market competition and inequality that may weigh on its potential benefits, either directly or indirectly, by prompting preventive policy measures to limit its development and adoption.”

Hundreds of thousands of chips required for AI systems

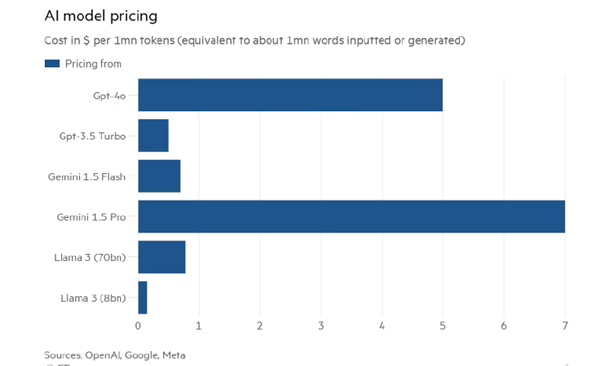

And then there is the cost of investment. Just gaining access to the physical infrastructure needed for large scale AI can be a challenge. The sort of computer systems needed to run an AI for cancer drug research typically require between two and three thousand of the latest computer chips. The cost of such computer hardware alone could easily come in at upwards of $60m (£48m), even before costs for other essentials such as data storage and networking. A big bank, pharmaceutical firm or manufacturer might have the resources to buy in the tech it needs to take advantage of the latest AI, but what about a smaller firm?
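As a rough check on the scale involved, the figures above imply an average hardware bill per chip. This is a minimal sketch using only the numbers in the paragraph ($60m as a floor, two to three thousand chips). The per-chip results are implied averages, not quoted prices, and the variable names are illustrative.

```python
hardware_cost_usd = 60_000_000  # "upwards of $60m", so treated as a lower bound
chip_counts = (2_000, 3_000)    # "between two and three thousand" chips

# Implied average spend per chip at each end of the range.
implied_cost_per_chip = {n: hardware_cost_usd / n for n in chip_counts}

for n, cost in implied_cost_per_chip.items():
    print(f"{n:,} chips -> at least ${cost:,.0f} per chip")
```

That is an implied $20,000-30,000 or more per chip before storage and networking are counted, which gives a sense of why smaller firms may struggle to buy in.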

So contrary to the conventional view and much more in line with Marxist theory, the introduction of AI investment will not lead to a cheapening of fixed assets (constant capital in Marxist terms) and therefore a fall in the ratio of fixed asset costs to labour, but the opposite (ie a rising organic composition of capital). And that means further downward pressure on average profitability in the major economies.

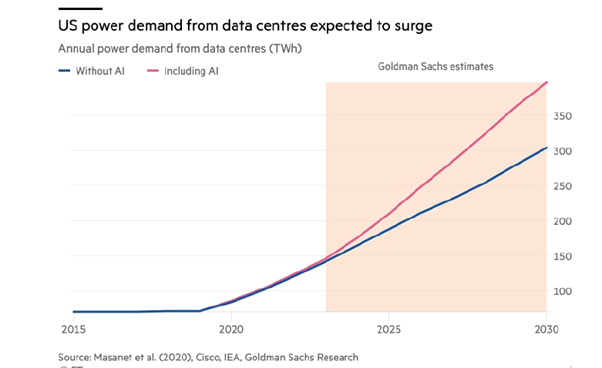

Consuming 6,000 times more energy than a European city

And there is the impact on global warming and energy use. Large language models such as ChatGPT are some of the most energy-guzzling technologies of all. Research suggests, for instance, that about 700,000 litres of water could have been used to cool the machines that trained GPT-3 at Microsoft’s data facilities. Training AI models consumes 6,000 times more energy than a European city. Furthermore, while minerals such as lithium and cobalt are most commonly associated with batteries in the motor sector, they are also crucial for the batteries used in datacentres. The extraction process often involves significant water usage and can lead to pollution, undermining water security.

Data centres will make up 9 per cent of US power demand by 2030

Grid Strategies, a consultancy, forecasts US electricity demand growth of 4.7 per cent over the next five years, nearly doubling its projection from a year earlier. A study by the Electric Power Research Institute found that data centres will make up 9 per cent of US power demand by 2030, more than double current levels.

Already that prospect is leading to a slowdown in plans to retire coal plants as power demand from AI surges.

Maybe these investment and energy costs can be reduced with new AI developments. Swiss technology firm FinalSpark has launched Neuroplatform, the world’s first bioprocessing platform where human brain organoids (lab-grown miniaturized versions of organs) perform computational tasks instead of silicon chips. The first such facility hosts the processing prowess of 16 brain organoids, which the company claims use a million times less power than their silicon counterparts. This is a frightening development in one sense: human brains! But luckily it is a long way from implementation. Unlike silicon chips, which can last for years, if not decades, the ‘organoids’ only last 100 days before ‘dying’.

Contrary to the GS economists, those at the frontier of AI development are much less sanguine about its impact. As Demis Hassabis, head of Google’s AI research division, puts it: “AI’s biggest promise is just that – a promise. Two fundamental problems remain unsolved. One involves making AI models that are trained on historic data, understand whatever new situation they are put in and respond appropriately.” AI needs to be able to “understand and respond to our complex and dynamic world, just as we do”.

Can AI understand and respond to our complex world?

But can AI do that? In my previous post on AI, I argued that AI cannot really replace human intelligence. And Yann LeCun, chief AI scientist at Meta, the social media giant that owns Facebook and Instagram, agrees. He said that LLMs had “very limited understanding of logic … do not understand the physical world, do not have persistent memory, cannot reason in any reasonable definition of the term and cannot plan … hierarchically”. LLMs are models that learn only when human engineers intervene to train them on that information, rather than coming to a conclusion organically as people do. “It certainly appears to most people as reasoning – but mostly it’s exploiting accumulated knowledge from lots of training data.”

Aron Culotta, associate professor of computer science at Tulane University, put it another way: “common sense had long been a thorn in the side of AI”, and it was challenging to teach models causality, leaving them “susceptible to unexpected failures”.

“Let’s stop calling it artificial intelligence” (Noam Chomsky)

Noam Chomsky summed up the limitations of AI relative to human intelligence. “The human mind is not like ChatGPT and its ilk, a lumbering statistical engine for pattern matching, gorging on hundreds of terabytes of data and extrapolating the most likely conversational response or most probable answer to a scientific question. On the contrary, the human mind is a surprisingly efficient and even elegant system that operates with small amounts of information; it seeks not to infer brute correlations among data points but to create explanations. Let’s stop calling it artificial intelligence and call it for what it is ‘plagiarism software’ because it does not create anything but copies existing works, of artists, modifying them enough to escape copyright laws.”

That brings me to what I might call the Altman syndrome. AI under capitalism is not innovation aiming to extend human knowledge and relieve humanity of toil. For capitalist innovators like Sam Altman, it is innovation for making profits. Sam Altman, the founder of OpenAI, was removed from controlling his company last year because other board members reckoned he wanted to turn OpenAI into a huge money-making operation backed by big business (Microsoft is the current financial backer), while the rest of the board continued to see OpenAI as a non-profit operation aiming to spread the benefits of AI to all with proper safeguards on privacy, supervision and control. Altman had developed a ‘for-profit’ business arm, enabling the company to attract outside investment and commercialise its services. Altman was soon back in control when Microsoft and other investors wielded the baton on the rest of the board. OpenAI is no longer open.

The potential of AI under common ownership

Machines cannot think of potential and qualitative changes. New knowledge comes from such transformations (human), not from the extension of existing knowledge (machines). Only human intelligence is social and can see the potential for change, in particular social change, that leads to a better life for humanity and nature. Rather than develop AI to make profits, reduce jobs and the livelihoods of humans, AI under common ownership and planning could reduce the hours of human labour for all and free humans from toil to concentrate on creative work that only human intelligence can deliver.

From the blog of Michael Roberts. The original, with all charts and hyperlinks, can be found here.

The image at the top of the article shows the ‘Grace’ chip released by NVIDIA in 2021 for use in data centres running giant AI. Source: nvidianews.nvidia.com/news